Tree crown delineation using Detectree¶

Forest Modelling

Context¶

Purpose¶

Accurately delineate tree crowns using detectron2, a library that provides state-of-the-art deep learning detection and segmentation algorithms.

Modelling approach¶

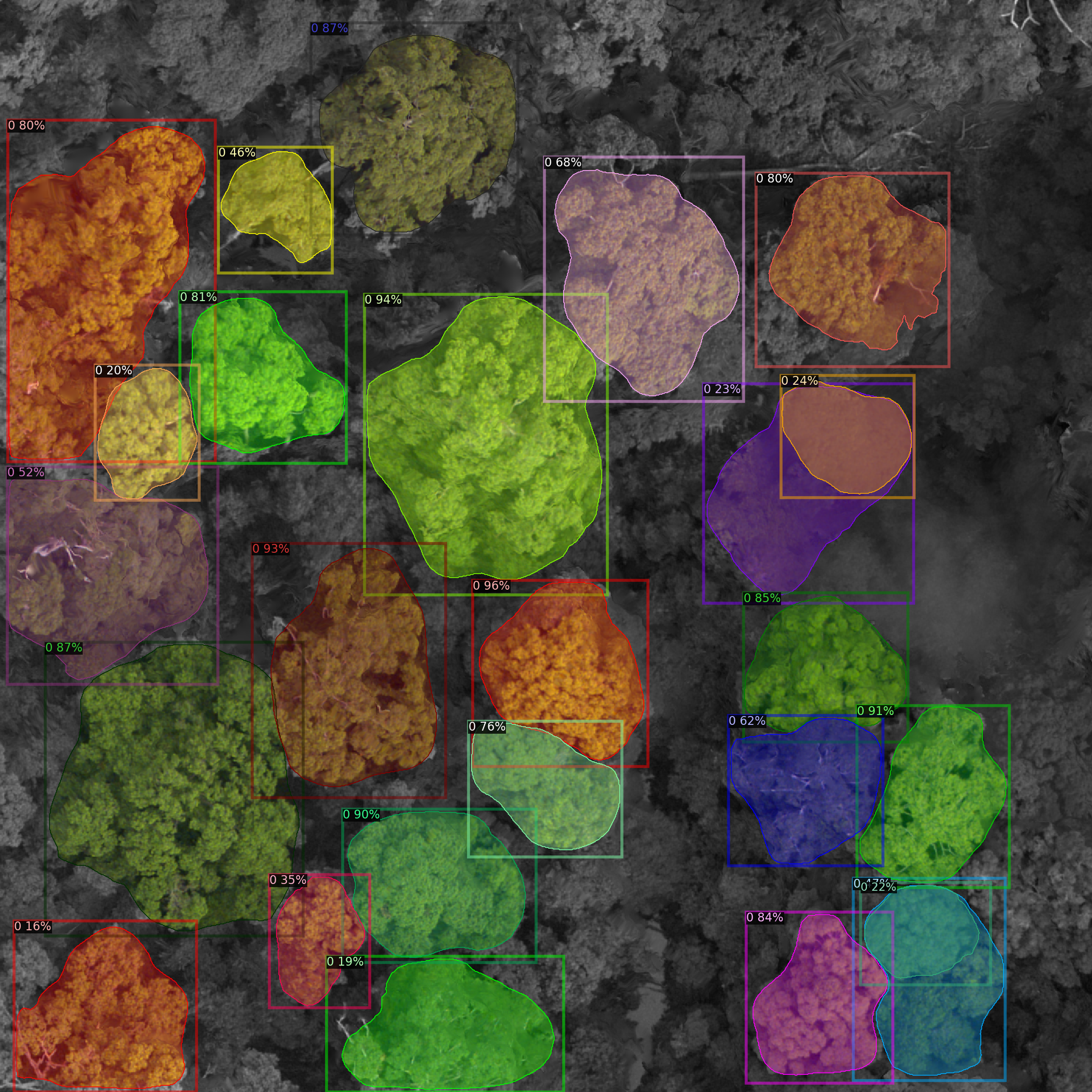

Mask R-CNN, an established deep learning model, was deployed from the detectron2 library to delineate tree crowns accurately. A pre-trained model, named Detectree, is provided to predict the location and extent of tree crowns from a top-down RGB image captured by drone, aircraft or satellite. Detectree was implemented in Python 3.8 using PyTorch v1.7.1 and detectron2 v0.5. Further details can be found in the repository documentation.

Highlights¶

Detectree advances the state of the art in tree identification from RGB images by delineating the exact extent of each tree crown.

We demonstrate how to apply the pretrained model to a sample image fetched from a Zenodo repository.

Our pre-trained model was developed using aircraft images of tropical forests in Malaysia.

The model can be further trained using the user’s own images.

Contributions¶

Notebook¶

Sebastian H. M. Hickman (author), University of Cambridge, @shmh40

Alejandro Coca-Castro (reviewer), The Alan Turing Institute, @acocac, 27/10/21 (latest revision)

Modelling codebase¶

Modelling funding¶

The project was supported by the UKRI Centre for Doctoral Training in Application of Artificial Intelligence to the study of Environmental Risks (AI4ER) (EP/S022961/1).

Note

We acknowledge the authors of the Detectron2 package, which provides the Mask R-CNN architecture.

Install and load libraries¶

# install pytorch

!pip -q install torch==1.8.0

!pip -q install torchvision==0.9.0

## install detectron2

!python -m pip install 'git+https://github.com/facebookresearch/detectron2.git'

## install geospatial libraries

!pip -q install geopandas==0.10.1

!pip -q install rasterio==1.2.9

!pip -q install fiona==1.8.18

!pip -q install shapely==1.7.1

## install intake plugins

!pip -q install git+https://github.com/ESM-VFC/intake_zenodo_fetcher.git
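Because the commands above pin specific versions, it can help to confirm what actually ended up in the environment before continuing. The following is a minimal sketch using only the standard library; the helper name `installed_version` and the selection of packages are illustrative assumptions and not part of the original notebook.

```python
from importlib import metadata


def installed_version(package):
    """Return the installed version string of `package`, or None if it is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


# Distribution names as used in the pip commands above (illustrative selection).
for name in ["torch", "torchvision", "detectron2", "geopandas", "rasterio", "fiona", "shapely"]:
    print(f"{name}: {installed_version(name) or 'not installed'}")
```

A missing package is reported as "not installed" rather than raising, so the loop always completes.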

import cv2

from PIL import Image

import os

import numpy as np

import urllib.request

import glob

# intake library and plugin

import intake

from intake_zenodo_fetcher import download_zenodo_files_for_entry

# geospatial libraries

import geopandas as gpd

from rasterio.transform import from_origin

import rasterio.features

import fiona

from shapely.geometry import shape, mapping, box

from shapely.geometry.multipolygon import MultiPolygon

# machine learning libraries

from detectron2 import model_zoo

from detectron2.engine import DefaultPredictor

from detectron2.utils.visualizer import Visualizer, ColorMode

from detectron2.config import get_cfg

from detectron2.engine import DefaultTrainer

# visualisation

import holoviews as hv

from IPython.display import display

import geoviews.tile_sources as gts

import hvplot.pandas

import hvplot.xarray

hv.extension('bokeh', width=100)

Set folder structure¶

# Define the project main folder

data_folder = './forest-modelling-detectree'

# Set the folder structure

config = {

'in_geotiff': os.path.join(data_folder, 'input','tiff'),

'in_png': os.path.join(data_folder, 'input','png'),

'model': os.path.join(data_folder, 'model'),

'out_geotiff': os.path.join(data_folder, 'output','raster'),

'out_shapefile': os.path.join(data_folder, 'output','vector'),

}

# Create each folder if it does not already exist

for val in config.values():

    os.makedirs(val, exist_ok=True)

Load and prepare input image¶

Fetch a GeoTIFF file of aerial forest imagery using intake¶

Let’s fetch a sample aerial image from a Zenodo repository.

# write a catalog YAML file

catalog_file = os.path.join(data_folder, 'catalog.yaml')

with open(catalog_file, 'w') as f:

    f.write('''

sources:

  sepilok_rgb:

    driver: rasterio

    description: 'NERC RGB images of Sepilok, Sabah, Malaysia (collection)'

    metadata:

      zenodo_doi: "10.5281/zenodo.5494629"

    args:

      urlpath: "{{ CATALOG_DIR }}/input/tiff/Sep_2014_RGB_602500_646600.tif"

      ''')

cat_tc = intake.open_catalog(catalog_file)

for catalog_entry in list(cat_tc):

    download_zenodo_files_for_entry(

        cat_tc[catalog_entry],

        force_download=False

    )

will download https://zenodo.org/api/files/271e78b4-b605-4731-a127-bd097e639bf8/Sep_2014_RGB_602500_646600.tif to /Users/acoca/OneDrive - The Alan Turing Institute/Documents/projects/envsensors/wp3/repos/environmental-ai-book/book/forest/modelling/forest-modelling-detectree/input/tiff/Sep_2014_RGB_602500_646600.tif

tc_rgb = cat_tc["sepilok_rgb"].to_dask()

Inspect the aerial image¶

Let’s inspect the data array: what are its shape, bands, coordinates and CRS?

# `count` is a method of the DataArray, so read the number of bands from its dimensions instead

print('shape =', tc_rgb.shape,',', 'and number of bands =', tc_rgb.sizes['band'], ', crs =', tc_rgb.crs)

print(tc_rgb)

shape = (4, 1400, 1400) , and number of bands = 4 , crs = +init=epsg:32650

<xarray.DataArray (band: 4, y: 1400, x: 1400)>

array([[[36166.285 , 34107.22 , ..., 20260.998 , 11166.631 ],

[32514.84 , 28165.994 , ..., 24376.36 , 21131.947 ],

...,

[15429.493 , 16034.794 , ..., 19893.691 , 19647.646 ],

[12534.722 , 14003.215 , ..., 21438.908 , 22092.525 ]],

[[38177.168 , 36530.74 , ..., 19060.268 , 11169.006 ],

[34625.227 , 30270.379 , ..., 21760.09 , 20796.621 ],

...,

[17757.678 , 16818.102 , ..., 22538.023 , 23093.508 ],

[13403.302 , 13354.489 , ..., 24638.21 , 25545.938 ]],

[[13849.501 , 14158.603 , ..., 9385.764 , 7401.662 ],

[13252.31 , 13373.801 , ..., 12217.845 , 10666.252 ],

...,

[13471.741 , 11533.697 , ..., 7536.6924, 8397.009 ],

[13724.59 , 11057.722 , ..., 9778.8125, 11174.72 ]],

[[65535. , 65535. , ..., 65535. , 65535. ],

[65535. , 65535. , ..., 65535. , 65535. ],

...,

[65535. , 65535. , ..., 65535. , 65535. ],

[65535. , 65535. , ..., 65535. , 65535. ]]], dtype=float32)

Coordinates:

* band (band) int64 1 2 3 4

* y (y) float64 6.467e+05 6.467e+05 6.467e+05 ... 6.466e+05 6.466e+05

* x (x) float64 6.025e+05 6.025e+05 6.025e+05 ... 6.026e+05 6.026e+05

Attributes:

transform: (0.1, 0.0, 602480.0, 0.0, -0.1, 646720.0)

crs: +init=epsg:32650

res: (0.1, 0.1)

is_tiled: 0

nodatavals: (-3.3999999521443642e+38, -3.3999999521443642e+38, -3.399...

scales: (1.0, 1.0, 1.0, 1.0)

offsets: (0.0, 0.0, 0.0, 0.0)

AREA_OR_POINT: Area

Save the RGB bands of the GeoTIFF file as a PNG¶

The prediction step below reads its input image from a PNG file, so let’s export the RGB bands of the GeoTIFF to PNG.

minx = 602500

miny = 646600

R = tc_rgb[0]

G = tc_rgb[1]

B = tc_rgb[2]

# stack up the bands in an order appropriate for saving with cv2, then rescale to the correct 0-255 range for cv2

# you will have to change the rescaling depending on the values of your tiff!

rgb = np.dstack((R,G,B)) # bands stacked in R, G, B order; note that cv2 itself treats channels as B, G, R

rgb_rescaled = 255*rgb/65535 # scale to image

# save this as png, named with the origin of the specific tile - change the filepath!

filepath = config['in_png'] + '/' + 'tile_' + str(minx) + '_' + str(miny) + '.png'

cv2.imwrite(filepath, rgb_rescaled)

True
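The division by 65535 above assumes 16-bit imagery. As a hedged sketch of how the rescaling could be adapted to other value ranges, the hypothetical helper `rescale_to_uint8` below takes the maximum value as a parameter and clips before converting to 8-bit. The helper and the sample values are made up for illustration and are not part of the original notebook.

```python
import numpy as np


def rescale_to_uint8(array, max_value=65535):
    """Linearly map values in [0, max_value] to [0, 255] and return uint8."""
    scaled = 255.0 * np.asarray(array, dtype=np.float64) / max_value
    # Clip so out-of-range inputs cannot wrap around when cast to uint8.
    return np.clip(np.rint(scaled), 0, 255).astype(np.uint8)


sample = np.array([0, 32768, 65535, 70000])  # illustrative 16-bit-like values
print(rescale_to_uint8(sample))
```

For imagery with a different bit depth, only `max_value` would need to change, for example `rescale_to_uint8(rgb, max_value=255)` for data that is already 8-bit.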

Read in and display the PNG file¶

im = cv2.imread(filepath)

display(Image.fromarray(im))

Modelling¶

Download the pretrained model¶

# define the URL to retrieve the model

fn = 'model_final.pth'

url = f'https://zenodo.org/record/5515408/files/{fn}?download=1'

urllib.request.urlretrieve(url, config['model'] + '/' + fn)

('./forest-modelling-detectree/model/model_final.pth',

<http.client.HTTPMessage at 0x7fcb9426cb50>)
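The cell above downloads the weights every time it runs. A small guard can skip the download when the file is already on disk, similar in spirit to `force_download=False` in the data-fetching step. This is a minimal sketch: `download_if_missing` is a hypothetical helper, and the stand-in `fake_fetch` function and the example.org URL exist only so the demonstration runs without network access.

```python
import os
import tempfile
import urllib.request


def download_if_missing(url, destination, fetch=urllib.request.urlretrieve):
    """Download `url` to `destination` unless the file already exists.

    Returns True when a download was triggered and False when it was skipped.
    """
    if os.path.exists(destination):
        return False
    os.makedirs(os.path.dirname(destination) or ".", exist_ok=True)
    fetch(url, destination)
    return True


# Demonstration with a stand-in fetch function, so no network access is needed.
def fake_fetch(url, destination):
    with open(destination, "wb") as handle:
        handle.write(b"placeholder weights")


with tempfile.TemporaryDirectory() as tmp:
    target = os.path.join(tmp, "model", "model_final.pth")
    first = download_if_missing("https://example.org/model_final.pth", target, fetch=fake_fetch)
    second = download_if_missing("https://example.org/model_final.pth", target, fetch=fake_fetch)
    print(first, second)
```

In the notebook itself the call would look like `download_if_missing(url, config['model'] + '/' + fn)`, which falls back to `urllib.request.urlretrieve`.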

Settings of detectron2 config¶

The following lines configure the main prediction settings and load them into a DefaultPredictor object.

cfg = get_cfg()

# if you want to make predictions using a CPU, run the following line. If using a GPU, comment it out.

cfg.MODEL.DEVICE='cpu'

# model and hyperparameter selection

cfg.merge_from_file(model_zoo.get_config_file("COCO-InstanceSegmentation/mask_rcnn_R_101_FPN_3x.yaml"))

cfg.DATALOADER.NUM_WORKERS = 2

cfg.SOLVER.IMS_PER_BATCH = 2

cfg.MODEL.ROI_HEADS.NUM_CLASSES = 1

### path to the saved pre-trained model weights

cfg.MODEL.WEIGHTS = config['model'] + '/model_final.pth'

# set confidence threshold at which we predict

cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = 0.15

#### Settings for predictions using detectron config

predictor = DefaultPredictor(cfg)
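To illustrate what the confidence threshold set above does: with `SCORE_THRESH_TEST = 0.15`, only detections scoring above 0.15 are returned. The sketch below mimics that filtering in plain Python on made-up detections. It is an illustration of the idea, not detectron2's internal implementation, and `filter_by_score` is a hypothetical helper.

```python
def filter_by_score(detections, threshold=0.15):
    """Keep only detections whose confidence score exceeds `threshold`."""
    return [d for d in detections if d["score"] > threshold]


# Made-up detections for illustration only.
detections = [
    {"id": 0, "score": 0.92},
    {"id": 1, "score": 0.40},
    {"id": 2, "score": 0.10},
]

for threshold in (0.15, 0.5):
    kept = filter_by_score(detections, threshold)
    print(threshold, [d["id"] for d in kept])
```

A low threshold such as 0.15 favours recall (more crowns, more false positives), while a higher value keeps only the most confident crowns.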

Outputs¶

Showing the predictions from detectree¶

outputs = predictor(im)

v = Visualizer(im[:, :, ::-1], scale=1.5, instance_mode=ColorMode.IMAGE_BW) # remove the colors of unsegmented pixels

v = v.draw_instance_predictions(outputs["instances"].to("cpu"))

image = cv2.cvtColor(v.get_image()[:, :, :], cv2.COLOR_BGR2RGB)

display(Image.fromarray(image))

Convert predictions into geospatial files¶

To GeoTIFF¶

# boolean masks with shape (num_instances, height, width)

mask_array = outputs['instances'].pred_masks.cpu().numpy()

# get confidence scores too

mask_array_scores = outputs['instances'].scores.cpu().numpy()

# encode each mask as 255 (crown) / 0 (background), one band per predicted instance

fresh_output = np.where(mask_array, 255, 0).astype(np.float64)

# pixel size in metres: the tile spans 140 m (1400 pixels at 0.1 m resolution)

x_scaling = 140/fresh_output.shape[2]

y_scaling = 140/fresh_output.shape[1]

# affine transform placing the tile at its map origin. The offsets (-20, +120) and the 140 m extent

# are specific to this sample tile and must be adapted for other images.

transform = from_origin(int(filepath[-17:-11])-20, int(filepath[-10:-4])+120, x_scaling, y_scaling)

output_raster = config['out_geotiff'] + '/' + 'predicted_rasters_' + filepath[-17:-4]+ '.tif'

with rasterio.open(output_raster, 'w', driver='GTiff',

                   height = fresh_output.shape[1], width = fresh_output.shape[2], count = fresh_output.shape[0],

                   dtype=str(fresh_output.dtype),

                   crs='+proj=utm +zone=50 +datum=WGS84 +units=m +no_defs',

                   transform=transform) as new_dataset:

    new_dataset.write(fresh_output)
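The georeferencing above relies on slicing the tile origin out of the file path at fixed positions and on a north-up affine transform. The following standard-library sketch makes both steps explicit. The helpers `tile_origin_from_name` and `pixel_to_map` are hypothetical, and the offsets and the 140 m extent are taken from this sample tile only.

```python
import re


def tile_origin_from_name(path):
    """Extract (minx, miny) from a name ending in '<minx>_<miny>.<ext>'."""
    match = re.search(r"(\d+)_(\d+)\.\w+$", path)
    if match is None:
        raise ValueError(f"no tile origin found in {path!r}")
    return int(match.group(1)), int(match.group(2))


def pixel_to_map(col, row, west, north, pixel_size):
    """Map the top-left corner of pixel (col, row) to map coordinates."""
    return west + col * pixel_size, north - row * pixel_size


minx, miny = tile_origin_from_name("input/png/tile_602500_646600.png")
west, north = minx - 20, miny + 120   # offsets used for this sample tile
pixel_size = 140 / 1400               # 140 m spread over 1400 pixels

print(pixel_to_map(0, 0, west, north, pixel_size))        # upper-left corner
print(pixel_to_map(1400, 1400, west, north, pixel_size))  # lower-right corner
```

The upper-left corner should agree with the origin in the `transform` attribute of the input image (602480, 646720), which is a quick sanity check that the exported raster lines up with the source GeoTIFF.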

To shapefile¶

# Read the predicted raster with Rasterio and polygonize it band by band (one band per predicted crown)

out_shp = config['out_shapefile'] + '/predicted_polygons_' + filepath[-17:-4] + '_' + str(0) + '.shp'

shp_schema = {'geometry': 'MultiPolygon','properties': {'pixelvalue': 'int', 'score': 'float'}}

with rasterio.open(output_raster) as src:

    for i in range(src.count):

        src_band = np.float32(src.read(i+1))

        conf = mask_array_scores[i]

        # Keep track of unique pixel values in the input band

        unique_values = np.unique(src_band)

        # Polygonize with Rasterio. `shapes()` returns an iterable

        # of (geom, value) as tuples

        shapes = list(rasterio.features.shapes(src_band, transform=src.transform))

        # Create the shapefile for the first crown, then append the remaining ones

        mode = 'w' if i == 0 else 'a'

        with fiona.open(out_shp, mode, 'ESRI Shapefile', shp_schema) as shp:

            polygons = [shape(geom) for geom, value in shapes if value == 255.0]

            multipolygon = MultiPolygon(polygons)

            # simplify not needed here

            shp.write({

                'geometry': mapping(multipolygon),

                'properties': {'pixelvalue': int(unique_values[1]), 'score': float(conf)}

            })

Interactive map to visualise the exported files¶

Plot tree crown delineation predictions and scores¶

# load and plot polygons

in_shp = glob.glob(config['out_shapefile'] + '/*.shp')

poly_df = gpd.read_file(in_shp[0])

plot_vector = poly_df.hvplot(hover_cols=['score'], legend=False).opts(fill_color=None,line_color=None,alpha=0.5, width=800, height=600)

plot_vector

Plot the exported GeoTIFF file¶

# load and plot RGB image

r = tc_rgb.sel(band=[1,2,3])

normalized = r/(r.quantile(.99,skipna=True)/255)

mask = normalized.where(normalized < 255)

int_arr = mask.astype(int)

plot_rgb = int_arr.astype('uint8').hvplot.rgb(

x='x', y='y', bands='band', data_aspect=0.8, hover=False, legend=False, rasterize=True

)
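The normalization above scales the image so that the 99th percentile maps to 255 and then masks everything at or above 255, which leaves gaps where the brightest pixels were. As a hedged alternative, the sketch below clips those pixels to 255 instead of masking them. `percentile_stretch` is a hypothetical helper operating on a plain NumPy array, and the synthetic band is for illustration only.

```python
import numpy as np


def percentile_stretch(values, percentile=99):
    """Scale values so the given percentile maps to 255, clipping brighter pixels."""
    values = np.asarray(values, dtype=np.float64)
    upper = np.nanpercentile(values, percentile)
    scaled = 255.0 * values / upper
    # Clip instead of masking, so very bright pixels saturate at 255.
    return np.clip(scaled, 0, 255).astype(np.uint8)


rng = np.random.default_rng(0)
band = rng.uniform(0, 65535, size=(4, 4))  # synthetic band, for illustration only
stretched = percentile_stretch(band)
print(stretched.dtype, stretched.min(), stretched.max())
```

Clipping trades a little saturation in the brightest areas for an image without masked pixels. Whether that is preferable depends on how the plot is used.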

Overlay the prediction labels and image¶

Note that the RGB image shows some artifacts introduced by the normalization procedure.

plot_rgb * plot_vector

Save plot¶

combined_plot = plot_rgb * plot_vector

hvplot.save(combined_plot, data_folder+'/combined_plot.html')

Summary¶

We have read in a raster, chosen a tile and made predictions on it. These predictions can then be transformed to shapefiles and examined in GIS software!

We made the predictions on the png using a pretrained Mask R-CNN model, detectree. The outputs showed the model’s capability to detect and delineate tree crowns from aerial imagery.

We then extracted our predictions, added the geospatial location back in, and exported them as shapefiles including the confidence score assigned to each prediction by the model.

Finally, we visualised the predictions on an interactive map!